By extending our thinking to the future and naming systemic barriers, we can gain new insights to creatively tackle misinformation.

This article was co-authored by Lisa Rudnick and Mille Bojer.

Overview

Digital information technologies have the potential to benefit individuals and societies in many useful and important ways, but their propensity to amplify and accelerate misinformation leads to vulnerabilities on a global scale. This scale is possible because digital technologies have created conditions in which information:

- spreads further, faster across vast networks of interconnected channels and platforms;

- can be micro-targeted to increasingly specific groups and profiles; and

- can be segmented so effectively that different realities can be presented to different people.

These online conditions have many real-world impacts. In particular, they play an important role in the erosion of social cohesion.

By exploiting tensions and fears and creating confusion around issues of deep importance for wellbeing, sustainability, and stability, digital misinformation fuels polarisation and makes it difficult to recognize which sources of information should be trusted.

Likewise, the more difficult it becomes for people to discern fact from fiction, the more easily doubt is sown: in science, government, social institutions, and in one another. In turn, this fundamental erosion of trust makes us more susceptible to misinformation itself and the various uses to which it is put (i.e. in disinformation tactics). This affects not only the choices we make as private citizens, but also the decisions and directions formulated and advanced by governments, multilateral organizations, and other influential institutions.

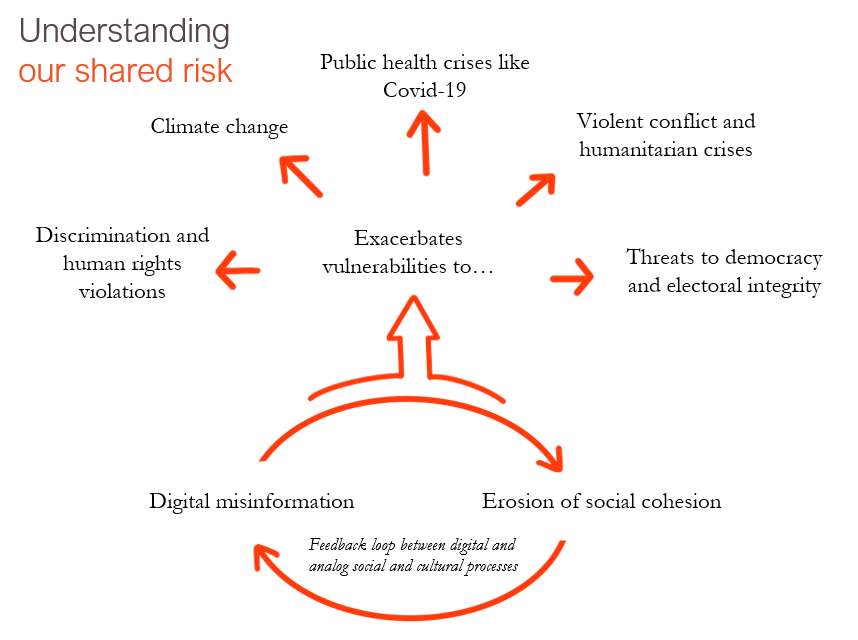

This dangerous feedback loop between digital misinformation and social cohesion exacerbates our vulnerabilities to a wide range of hazards, including but not limited to threats to democracy and electoral integrity, violations of human rights, violent conflict, polarisation, radicalization, and threats to global public health.

As a current example, this feedback loop has played a central role in the global “infodemic” catalysed by Covid.

The pandemic has helped shine a light on how, especially during times of crisis, we are vulnerable to the ways in which digital information technologies can be used to amplify existing grievances, exacerbate existing social tensions, and undermine trust in both democratic and scientific institutions and processes.

Indeed, during this period of heightened global vulnerability, unintentional misinformation has flourished across information ecosystems in part because it has been appropriated and accelerated by deliberate and coordinated disinformation tactics. These tactics seek out issues and locations where social cohesion is weakest and inject those issues into Covid-related messages, misunderstandings, and rumours, turning them into tools of manipulation and polarisation.

There is widespread recognition of these problematic dynamics and growing expertise on misinformation from a number of fields. Meanwhile, Covid has heightened the attention of new audiences and stakeholders, from everyday people to government ministries, as they endeavour to understand the challenges presented by digital misinformation, their wide-reaching implications, and potential solutions to them.

Key Patterns in Addressing Digital Misinformation

Over the past 18 months, we at Reos Partners have been listening to and participating in discussions with such actors as they explore these concerns from a variety of perspectives. As we have listened to researchers, civil society actors, and representatives of international organizations, technology companies, philanthropies, NGOs and others, we have noticed some key themes and patterns in the way problems and solutions related to digital misinformation are being discussed:

- Information focus: One way people talk about the challenges of misinformation is by focusing on the nature and status of information itself as the problem to be addressed, typically through identifying and/or correcting misinformation across the spectrum of digital information platforms and outlets. From this perspective, solutions involve verification, fact-checking tools, and knowledge products, as well as take-down and labelling practices.

- Technology focus: Another way people talk about the challenges of misinformation is by focusing on its technological dimensions (such as platforms, algorithms, AI, etc.) as both drivers of and solutions to the problem. From this perspective, solutions tend to focus on adaptations and advances in technologies, as well as the business models and regulatory frameworks that pertain to them.

- Skills focus: A third way involves focusing on building awareness and skills of citizens, civil society, and community journalists for navigating the digital information space in safe and ethical ways (as consumers, producers, and vectors). From this perspective, solutions include educational materials, training and workshops, information, and platforms, often with a focus on media literacy.

- Issue focus: A fourth way is by focusing on the impacts of misinformation around particular thematic concerns, topics, or specific initiatives, such as climate change, Covid-19, or specific elections. From this perspective, solutions aim to combat misinformation on the issue at hand, often through counter-messaging.

Each of these ways of talking and working has its own merit and can contribute to addressing some of the challenges we face. Each addresses a key leverage point. But by zooming in on misinformation itself as the issue, they address only part of the problem.

The Imperative of Social Cohesion

Communities and societies are more susceptible to misinformation when social cohesion is weak or fragmented.

Focusing on the content and channels of digital misinformation can cause us to overlook the role of social cohesion as an essential source of resilience to misinformation dynamics. Similarly, a focus on content and channels directs attention to building skills for media and digital literacy, but can leave other skills, such as conflict transformation, non-violent communication, dialogue, civic education, and other areas important for social cohesion, to the side.

Placing all the blame on “bad actors”, an “ignorant” public, and self-interested tech companies can lead to further polarisation and disempowerment, rather than engaging a much wider and more diverse set of actors in understanding and addressing the dynamics and impact of digital misinformation and in strengthening social cohesion.

In addition, organizing around thematic concerns seems to create a system of fragmented responses and disconnected approaches. The result is a compounding set of vocabularies and varying degrees of understanding of the central dynamics at hand. When we concentrate on the impact of misinformation on our own areas of concern (such as climate change, immigration, public health, or human rights violations), this can prevent us from gaining a view of the common risks that underlie all digital misinformation dynamics, and from working together to address them. It also has the effect of training our attention on present problems and immediate threats, rather than on considering the cumulative (and long-term) effects of digital misinformation dynamics more broadly.

The diverse stakeholders we consulted affirmed that at present there is insufficient collaboration across sectors, perspectives, and disciplines to grapple with the dynamics discussed here, and the common threats they pose. This leaves the potential contributions of cross-sector learning and insights untapped for new and more systemic, synergistic ways of thinking and acting.

Broadening collective attention from a focus on misinformation to a systemic perspective that centers social cohesion may help address some of these gaps and create new grounds for the transformative systems change that is needed. By attending explicitly to the feedback loop between digital misinformation and social cohesion as a core concern (rather than positioning it as a potential outcome from focusing on other concerns), we can broaden our thinking about the most crucial problems and solutions beyond the digital space. By reimagining who we think of as key stakeholders, we can bring new actors together to collaborate on new questions and challenges. By extending our thinking to the future, and naming systemic barriers and divergent interests, we can gain new insights and work to address them creatively.

The Shared Realities Project

To these ends, we have initiated the Shared Realities Project, a multi-local and global initiative that aims to enhance social cohesion and resilience in an age of mis- and disinformation. The Shared Realities Project, championed by Reos with a group of partners, applies the Transformative Scenarios Process to develop narratives of the future in diverse geographical contexts. The aim is to enhance visibility around the evolving local, transnational, and global drivers of digital misinformation and its widespread implications for social cohesion. These narratives will inform local and global level strategies to enhance and safeguard social cohesion, resilience, and preparedness. We are currently seeking partners for this initiative, including funders, local convenors, advisors, and participating stakeholders. To learn more, please contact info@sharedrealities.org.